LLMs cannot process 3D models directly

If you ask Claude, ChatGPT, or Gemini about a glb/glTF file, you quickly discover that they have no way to visualize the 3D model. Some LLMs can answer questions about the size of the model or can guess what it is based on the mesh/texture names in the glTF JSON.
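To make concrete what a text-only model has to work with, here is a minimal sketch that collects the names stored in a glTF JSON document. The `meshes`, `materials`, and `images` arrays and their optional `name` fields are part of glTF 2.0; the helper name `collectGltfNames` and the trimmed sample document are made up for illustration.

```javascript
// Collect the human-readable names stored in a parsed glTF 2.0 JSON document.
// These names are the main semantic hints available without rendering the model.
function collectGltfNames(gltf) {
  const namesOf = (list) =>
    (list ?? []).map((entry) => entry.name).filter((name) => typeof name === "string");
  return {
    meshes: namesOf(gltf.meshes),
    materials: namesOf(gltf.materials),
    images: namesOf(gltf.images),
  };
}

// Hypothetical, heavily trimmed glTF document for demonstration only.
const sampleGltf = {
  asset: { version: "2.0" },
  meshes: [{ name: "Helmet_Shell", primitives: [] }, { primitives: [] }],
  materials: [{ name: "LeatherMat" }],
};

console.log(collectGltfNames(sampleGltf));
```

Unnamed entries are skipped, which is why generically exported models often give an LLM very little to go on.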

Asking ChatGPT about an attached glb file

The Solution: LLMs excel at image analysis

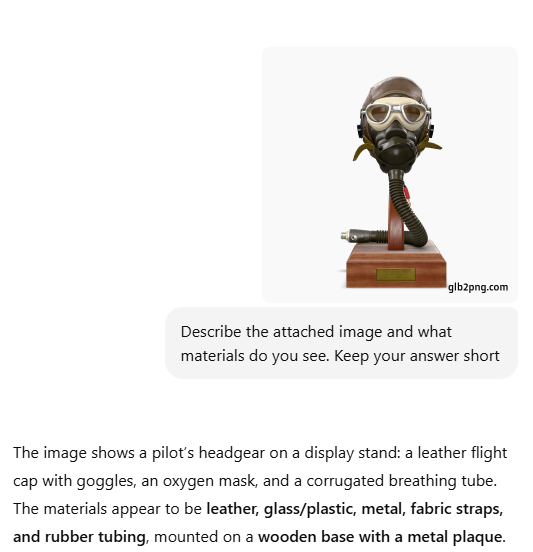

On the other hand, if you ask an LLM to describe an image or ask concrete questions about the image, it does a pretty good job.

ChatGPT describes an attached image

Implementation strategy

For this demonstration we'll use OpenAI's image API to ask questions about the Flight Helmet demo model from the Khronos Group.

Generating screenshot URLs for analysis

First, we'll define the two screenshot URLs that produce the input images for the LLM:

const screenshotUrl1 = `http://glb2png.com/v1/${GLB2PNG_API_KEY}/r/rv:0,rh:0,s:256/u/${GLB_URL}`

const screenshotUrl2 = `http://glb2png.com/v1/${GLB2PNG_API_KEY}/r/rv:45,rh:-90,s:256/u/${GLB_URL}`

GLB2PNG_API_KEY is the API key from your GLB2PNG dashboard, and GLB_URL is the public URL of your glb/glTF file. In our demo, GLB_URL points to the Flight Helmet we have seen above:

const GLB_URL = 'https://raw.githubusercontent.com/KhronosGroup/glTF-Sample-Models/refs/heads/main/2.0/FlightHelmet/glTF/FlightHelmet.gltf'
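If you need more than two viewing angles, the URL pattern above can be wrapped in a small helper. This is a minimal sketch: the function name `buildScreenshotUrl` and the placeholder key and model URL are hypothetical, only the `rv`, `rh`, and `s` parameters shown in this article are used, and `s` is assumed to be the image size.

```javascript
// Build a GLB2PNG screenshot URL following the pattern used in this article:
//   http://glb2png.com/v1/<key>/r/rv:<deg>,rh:<deg>,s:<size>/u/<model url>
// Only rv, rh, and s appear in the article; no other options are assumed.
function buildScreenshotUrl({ apiKey, glbUrl, rv = 0, rh = 0, size = 256 }) {
  return `http://glb2png.com/v1/${apiKey}/r/rv:${rv},rh:${rh},s:${size}/u/${glbUrl}`;
}

// Placeholder values; substitute your own key and model URL.
const views = [
  { rv: 0, rh: 0 },
  { rv: 45, rh: -90 },
];
const urls = views.map((view) =>
  buildScreenshotUrl({ apiKey: "YOUR_API_KEY", glbUrl: "https://example.com/model.glb", ...view })
);

console.log(urls);
```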

screenshotUrl1 with rv (rotation vertical) and rh (rotation horizontal) set to 0

screenshotUrl2 with rv:45 (45 degrees up) and rh:-90 (90 degrees to the left)

Defining structured output as JSON schema

const imageAnalysisJsonSchema = {
  type: "object",
  properties: {
    description: {
      type: "string",
      description: "Brief description of the model shown in the images"
    },
    materials: {
      type: "array",
      items: { type: "string" },
      description: "List of materials that can be seen in the images, materials only"
    },
    nsfw: {
      type: "boolean",
      description: "True if the images contain nudity, violence, or drugs"
    }
  },
  required: ["description", "materials", "nsfw"],
  additionalProperties: false
};

As you can see above, we want a short description of the 3D model, a list of the materials it uses, and an nsfw flag that tells us whether the model contains nudity, violence, or drugs.

Calling the OpenAI API

import OpenAI from "openai";

// The client reads the API key from the OPENAI_API_KEY environment variable.
const openai = new OpenAI();

const model = "gpt-4o-mini";

const response = await openai.chat.completions.create({
  model,
  messages: [
    {
      role: "user",
      content: [
        { type: "text", text: "Analyze this image and extract details according to the schema." },
        { type: "image_url", image_url: { url: screenshotUrl1 } },
        { type: "image_url", image_url: { url: screenshotUrl2 } },
      ],
    },
  ],
  response_format: {
    type: "json_schema",
    json_schema: {
      name: "imageAnalysisSchema",
      schema: imageAnalysisJsonSchema
    }
  }
});

// The structured output arrives as a JSON string in the message content.
const result = JSON.parse(response.choices[0].message.content);

We call the API and give it the desired JSON schema (imageAnalysisJsonSchema) and our screenshot URLs (screenshotUrl1 & screenshotUrl2).
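The same request shape works for any number of screenshots. A minimal sketch that assembles the payload as a plain object, so it can be inspected or logged before it is sent; the function name `buildAnalysisRequest`, the example image URLs, and the stub schema are hypothetical, and the prompt text is slightly adapted for multiple images.

```javascript
// Assemble the payload for chat.completions.create() from a list of image URLs.
function buildAnalysisRequest({ model, imageUrls, schema, schemaName = "imageAnalysisSchema" }) {
  return {
    model,
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: "Analyze these images and extract details according to the schema." },
          // One image_url content part per screenshot.
          ...imageUrls.map((url) => ({ type: "image_url", image_url: { url } })),
        ],
      },
    ],
    response_format: { type: "json_schema", json_schema: { name: schemaName, schema } },
  };
}

// Placeholder inputs for demonstration only.
const request = buildAnalysisRequest({
  model: "gpt-4o-mini",
  imageUrls: ["https://example.com/front.png", "https://example.com/side.png"],
  schema: { type: "object", properties: {}, additionalProperties: false },
});

console.log(JSON.stringify(request, null, 2));
```

The resulting object can be passed to `openai.chat.completions.create(request)`. Keep in mind that every extra screenshot adds prompt tokens and therefore cost.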

And as a result, we get the following JSON:

{
  "description": "A model of a vintage gas mask with attached headgear, mounted on a wooden base.",
  "materials": [
    "rubber",
    "metal",
    "wood",
    "glass",
    "fabric"
  ],
  "nsfw": false
}
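Before acting on the parsed result, it can be worth checking that it really has the three fields we asked for. A minimal hand-written sketch, not a full JSON Schema validator; the function name `checkAnalysisResult` is hypothetical.

```javascript
// Return a list of problems; an empty list means the result has the expected shape.
function checkAnalysisResult(result) {
  const problems = [];
  if (typeof result?.description !== "string") {
    problems.push("description must be a string");
  }
  if (!Array.isArray(result?.materials) || !result.materials.every((m) => typeof m === "string")) {
    problems.push("materials must be an array of strings");
  }
  if (typeof result?.nsfw !== "boolean") {
    problems.push("nsfw must be a boolean");
  }
  return problems;
}

// The result from above, used as sample input.
const sampleResult = {
  description: "A model of a vintage gas mask with attached headgear, mounted on a wooden base.",
  materials: ["rubber", "metal", "wood", "glass", "fabric"],
  nsfw: false,
};

console.log(checkAnalysisResult(sampleResult)); // prints []
```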

Cost analysis

In this demonstration, we use gpt-4o-mini and calculate the cost from the token usage reported in the response and the prices in the pricing table.

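// model is "gpt-4o-mini", as declared for the API call above.

// USD per token: the per-1M-token prices from the pricing table, divided by one million.
const pricePrompt = 0.8 / 1_000_000;
const priceCompletion = 3.2 / 1_000_000;

// response.usage reports the prompt and completion token counts for the call.
const costUsd =
  response.usage.prompt_tokens * pricePrompt +
  response.usage.completion_tokens * priceCompletion;

console.log('Price', `${(costUsd * 100).toFixed(6)} Cent USD`)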

At the time of publishing, running this call cost about 1.39 US cents. The script printed: Price 1.386160 Cent USD
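The calculation can also be written as a reusable function. A minimal sketch with made-up token counts, since the actual usage numbers of the call are not given in this article: 17,000 prompt tokens and 50 completion tokens at the prices used above give (17,000 × 0.8 + 50 × 3.2) / 1,000,000 = 0.01376 USD, or 1.376 cents. The function name `estimateCostCents` is hypothetical.

```javascript
// Estimate the cost of one call in US cents.
// Prices are given in USD per 1M tokens, as in the pricing table.
function estimateCostCents(usage, promptPricePerMillion, completionPricePerMillion) {
  const usd =
    (usage.prompt_tokens * promptPricePerMillion +
      usage.completion_tokens * completionPricePerMillion) /
    1_000_000;
  return usd * 100;
}

// Hypothetical token counts for illustration; use response.usage in practice.
const exampleUsage = { prompt_tokens: 17000, completion_tokens: 50 };
const cents = estimateCostCents(exampleUsage, 0.8, 3.2);

console.log(`${cents.toFixed(6)} Cent USD`);
```

In this example almost the entire cost comes from prompt tokens, so reducing the number of screenshots has far more effect than shortening the output.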

Use cases

- Categorization and tagging: Automatically categorize (e.g. animal, furniture, person, product, ...) or tag (e.g. red, metal, gaming-device, ...) certain 3D models

- Content safety and compliance: Detect if a user uploaded/generated a 3D model that contains nudity or sexual content

- Off-topic content: If you run an animal database, you may want to reject uploaded 3D models that are not animals
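All three use cases come down to a small decision step on top of the parsed result. A minimal sketch, assuming the schema were extended with a `category` string; that field is not part of the schema shown earlier, and the function name `reviewUpload` and the allowed list are equally illustrative.

```javascript
// Decide whether to accept an upload based on the analysis result.
// `category` is a hypothetical extra field; `nsfw` and `materials` come from the schema.
function reviewUpload(analysis, allowedCategories) {
  if (analysis.nsfw) {
    return { accepted: false, reason: "flagged as nsfw" };
  }
  if (!allowedCategories.includes(analysis.category)) {
    return { accepted: false, reason: `category "${analysis.category}" is not allowed` };
  }
  // Accepted uploads reuse the detected materials as tags.
  return { accepted: true, tags: analysis.materials };
}

// Example for an animal database; the analysis objects are made up.
const allowed = ["animal"];
const helmetReview = reviewUpload(
  { category: "product", materials: ["rubber", "metal"], nsfw: false },
  allowed
);
const foxReview = reviewUpload({ category: "animal", materials: ["fur"], nsfw: false }, allowed);

console.log(helmetReview, foxReview);
```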

Try it yourself

Access the complete source code and clone the project on GitHub